02 Jan How To Stay Safe on Google’s Major Algorithm Updates

It has been almost a decade now since Google set a course toward improving the quality of search. It keeps tweaking and refining its algorithm 500 to 600 times a year to give its users the most relevant, high-quality search results possible.

And while the majority of these updates are just chicken feed, some are real game-changers. So in this article, I’m going to walk you through the history of the most earth-shattering Google algorithm updates and give you detailed, step-by-step instructions on how to prepare for each of them.

Panda

The very first update that changed the SEO landscape forever and started the white-hat SEO revolution was Panda. In a nutshell, the algorithm targeted sites with low-quality and spammy content and down-ranked them in search results. Originally, Panda worked as a separate filter, but in 2016 it was incorporated into Google’s core algorithm.

Watch out for

- Duplicate content (internal and external)

- Keyword stuffing

- Thin content

- User-generated spam

- Irrelevant content

What to do

1. Get the full list of your webpages

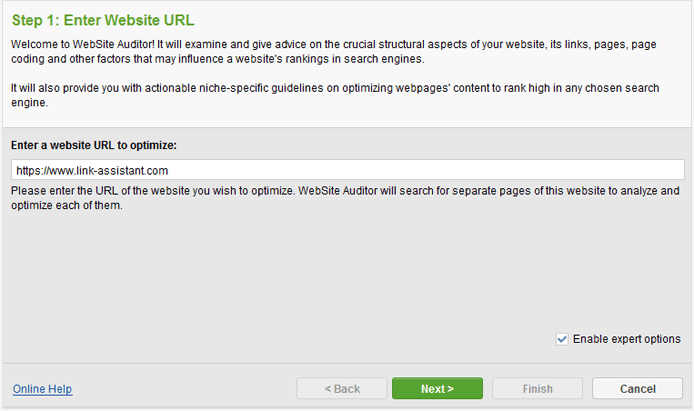

The best way to spot internally duplicated content, thin content, and keyword stuffing is to run regular site audits. And since the Panda score is assigned to the whole site, not to individual pages, you need to go through every single page of your site to identify low-quality content that drags your website’s overall quality down. Of course, this can be done comfortably with WebSite Auditor.

- Start the tool and create a new project for your site.

- After that, enter your website’s URL, check Enable expert options, and hit Next.

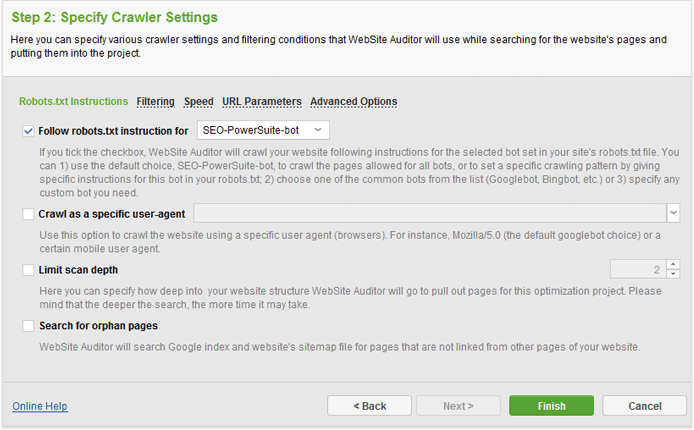

- At the next step, just leave the settings as they are: follow robots.txt instructions and don’t limit the scan depth, so that your entire website gets scanned. Hit Finish and give the software a couple of minutes to collect all your webpages.

2. Look for pages with duplicate content

Now that WebSite Auditor has kindly supplied you with the full list of pages, let’s find those that have internally duplicated content.

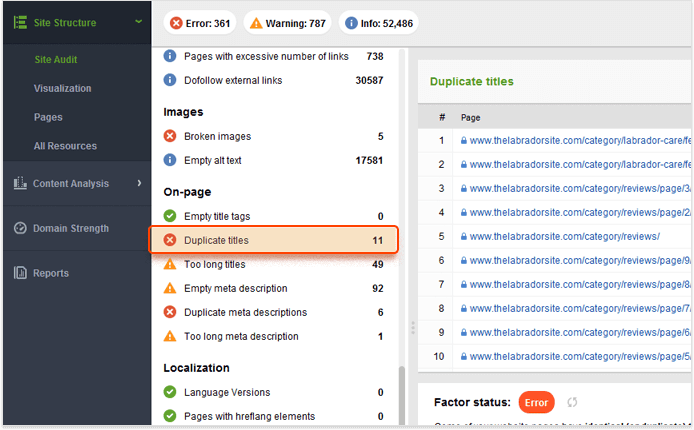

- Move to the Site Audit submodule, which is under Site Structure, scroll a bit down to the On-page section, and hit the Duplicate titles tab.

- Now look through the list of pages on the right to spot the ones with identical content.

After that, you can either fix the technical issues that caused the duplication or fill the pages with new content. Don’t forget to diversify their titles and meta descriptions as well. What’s more, watch out for similar-looking pieces of content, because they may be a sign of content automation.
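WebSite Auditor does this checking in its interface. To illustrate the underlying idea, here is a minimal Python sketch, not the tool’s actual method: it groups URLs that share a title and flags near-duplicate body text by comparing overlapping three-word sequences (shingles) with Jaccard similarity. The PAGES data, the shingle size, and the 0.5 threshold are illustrative assumptions you would tune for your own site.

```python
from collections import defaultdict

# Hypothetical crawl export: URL -> (title, visible body text).
PAGES = {
    "/red-shoes": ("Red Shoes | Shop", "Buy comfortable red shoes in all sizes with free delivery."),
    "/red-shoes?ref=nav": ("Red Shoes | Shop", "Buy comfortable red shoes in all sizes with free delivery."),
    "/blue-shoes": ("Blue Shoes | Shop", "Buy comfortable blue shoes in all sizes with free delivery."),
    "/about": ("About Us", "We are a family business founded in a small town."),
}


def duplicate_titles(pages):
    """Group URLs that share the same title (case-insensitive)."""
    groups = defaultdict(list)
    for url, (title, _body) in pages.items():
        groups[title.strip().lower()].append(url)
    return {title: urls for title, urls in groups.items() if len(urls) > 1}


def shingles(text, k=3):
    """Return the set of overlapping k-word sequences ("shingles") in a text."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Size of the intersection divided by size of the union (0.0 if both are empty)."""
    if not a and not b:
        return 0.0  # nothing to compare, so don't report a match
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.5, k=3):
    """Return (url_a, url_b, similarity) for body texts at or above the threshold."""
    urls = sorted(pages)
    sets = {url: shingles(pages[url][1], k) for url in urls}
    pairs = []
    for i, a in enumerate(urls):
        for b in urls[i + 1:]:
            score = jaccard(sets[a], sets[b])
            if score >= threshold:
                pairs.append((a, b, round(score, 2)))
    return pairs


if __name__ == "__main__":
    print(duplicate_titles(PAGES))
    print(near_duplicates(PAGES))
```

Exact duplicates, such as a URL and its tracking-parameter variant, score 1.0. Lowering the threshold surfaces template-like pages that differ by only a word or two, which is the kind of similarity that can hint at content automation.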

3. Check for pages with thin content

Thin content is yet another Panda trigger. In the age of semantic search, Google needs to understand what your page is actually about. And how is it supposed to do that with only a few lines of text on your page? Exactly. That’s why Google sees such pages as insignificant and will hardly rank them high in SERPs. So let’s go hunting for pages with thin content and fix them before they get you hit by Panda.

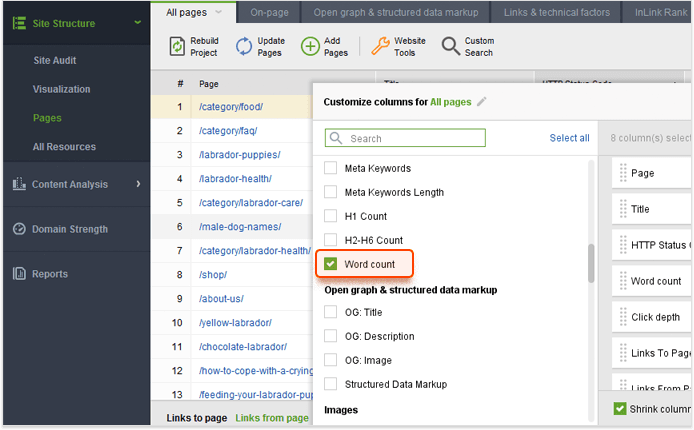

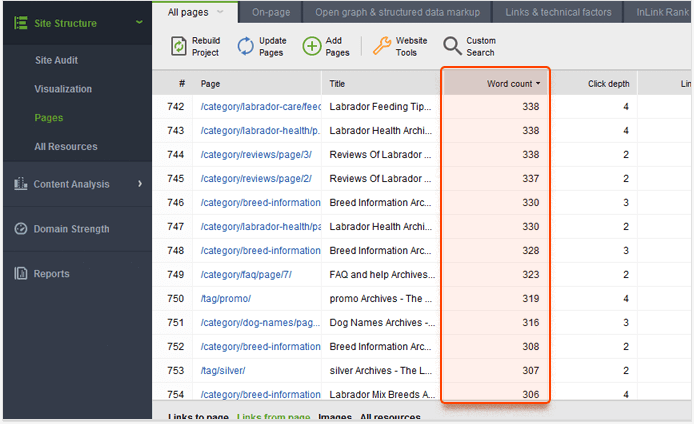

- Move to the Pages submodule, right-click the header of any column, and add the Word count column to your workspace.

- Now select all your pages and update the column. After that, the tool will show the exact number of words on every single page of your site.

Unfortunately, there are no Google-approved guidelines on how many words a piece of content needs so as not to be considered thin. Moreover, pages with relatively few words sometimes perform surprisingly well and even get into rich snippets. However, having too many pages with thin content will most probably get you hit by a Panda penalty. That is why you should investigate pages with fewer than 250 words and fill them with content where possible.
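The Word count column does this for you. If you have the visible text of each page (HTML already stripped) from some other export, the same check can be sketched in a few lines of Python. The 250-word cut-off is the rule of thumb above, not a Google figure, and the simple regex used to split words is an assumption that may count slightly differently than any particular tool.

```python
import re

# Rule-of-thumb cut-off from the text above; Google publishes no official minimum.
THIN_CONTENT_THRESHOLD = 250


def word_count(text):
    """Count words as runs of letters, digits, underscores, or apostrophes."""
    return len(re.findall(r"[\w']+", text))


def thin_pages(pages, threshold=THIN_CONTENT_THRESHOLD):
    """Return (url, words) for pages under the threshold, shortest first."""
    counts = [(url, word_count(text)) for url, text in pages.items()]
    return sorted((item for item in counts if item[1] < threshold), key=lambda item: item[1])


if __name__ == "__main__":
    # Hypothetical page texts with the HTML already stripped.
    sample = {
        "/guide": "word " * 600,
        "/contact": "Call us or drop by the shop.",
        "/tag/shoes": "Shoes",
    }
    for url, words in thin_pages(sample):
        print(f"{url}: {words} words")
```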

4. Find pages with externally duplicated content

Google wants every page on the Web to add as much value as possible. And this surely cannot be done with non-unique or plagiarized content. Therefore, it’s a good idea to check your content pages via Copyscape if you suspect they might have external duplication.

It’s also fair to say that some industries (like online shops that sell similar products) simply can’t always have 100% unique content. If you work in one of those industries, there are two ways out. You can either try to make your product pages as outstanding as possible or use customer testimonials, product reviews, and comments to diversify your content. By the way, here is a nice guide on how to make the most of user-generated content.

If you’ve noticed that someone is stealing your precious content, you can either get in touch with the webmasters and ask them to remove the plagiarized content or submit Google’s content removal form.

5. Check your pages for keyword stuffing

Keyword stuffing is another thing that attracts Panda. In a nutshell, keyword stuffing occurs when a webpage is overloaded with keywords in an attempt to manipulate search results. So let’s quickly check whether you’ve overdone it on any of your pages.

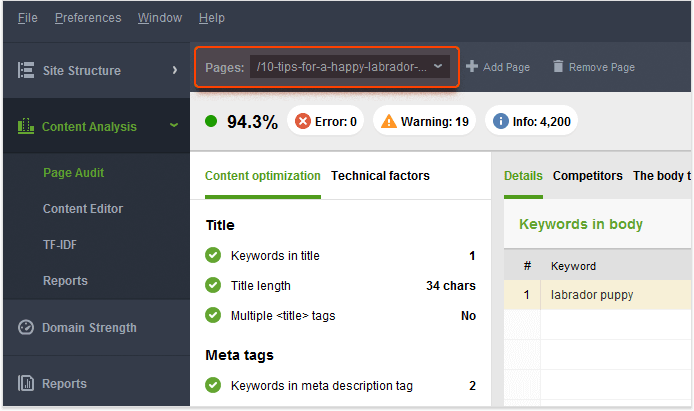

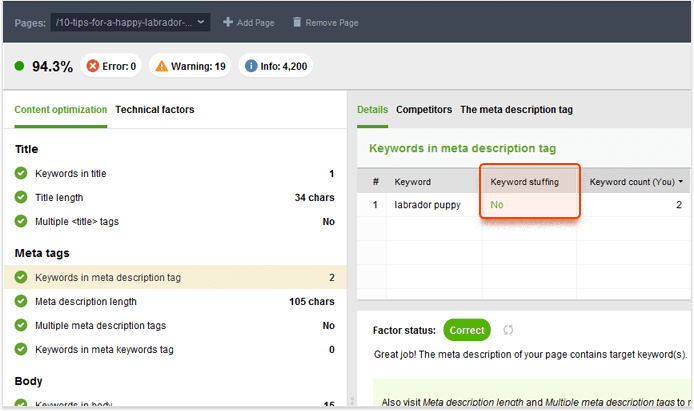

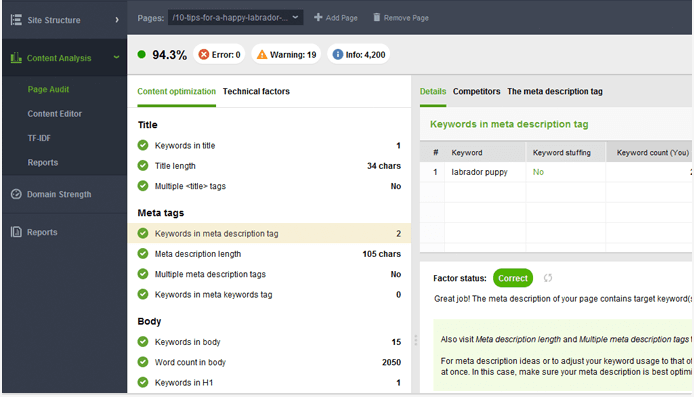

- Move to Content Analysis > Page Audit and enter the URL of the page you want to check for keyword stuffing in the Pages search bar in the top left corner.

- Then go through Keywords in title, Keywords in meta description tags, Keywords in body, and Keywords in H1 and have a look at the Keyword stuffing column on the right under the Details section.
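WebSite Auditor decides what counts as stuffing with its own logic, and Google publishes no density threshold. One common rough signal is keyword density: the share of a page’s words taken up by a keyword phrase. The Python sketch below computes it for body text; treat the number as a prompt for a manual read-through rather than a verdict.

```python
import re


def keyword_density(text, keyword):
    """Percentage of the words in `text` taken up by occurrences of `keyword`.

    Every word of a multi-word keyword counts, so "cheap shoes" appearing
    twice in a 20-word text gives 4 / 20 = 20%.
    """
    words = re.findall(r"[\w']+", text.lower())
    phrase = re.findall(r"[\w']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Count non-aligned matches of the phrase as a contiguous word sequence.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return 100.0 * hits * n / len(words)


if __name__ == "__main__":
    body = "Cheap shoes! Our cheap shoes are the best cheap shoes in town."
    print(f"{keyword_density(body, 'cheap shoes'):.1f}%")
```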

Penguin

Right after Panda, in 2012, Google launched another update called Penguin that aimed at down-ranking sites with manipulative, spammy links. The thing is, links have always been one of the most powerful ranking signals for search engines. But back in the day, Google’s algorithm couldn’t determine the quality of links. That’s why for many years SERPs were more like uncontrollable chaos: SEOs would just buy links or use spammy techniques to manipulate search results. Of course, this had to be stopped, so Penguin now works in real time, checking every internal and external link. Just like Panda, Penguin is now part of Google’s core algorithm.

Watch out for

- Links from spammy sites

- Links from topically irrelevant sites

- Paid links

- Links with overly optimized anchor text

What to do

1. Get the full list of backlinks

The very first thing to do when fighting spammy links is to extract the full list of backlinks pointing to your site. And of course, SEO SpyGlass is here to help you with that. With its new, constantly growing index and super-fast crawling speed, you can be sure the tool collects only up-to-date links.

- So start the tool, create a new project for your site, check Enable expert options, and hit Next.

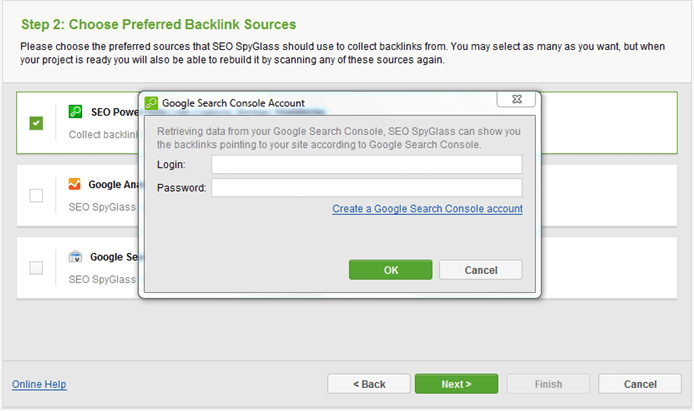

At the next step, the tool will ask you to specify the sources to pull the links from (PowerSuite Link Explorer, Google Search Console, and Google Analytics). I would recommend going for all of them to collect the most comprehensive list of links.

- So just check all three options, log into your Google Analytics and Google Search Console accounts, and hit Next.

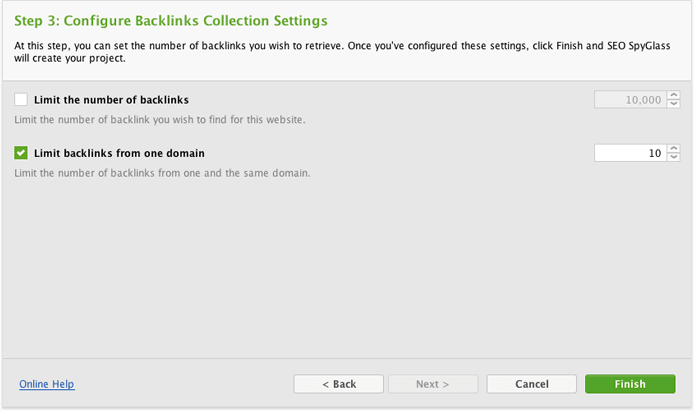

Now you need to decide on the number of links you’re willing to collect. By default, the tool collects 10 links per domain to filter out sitewide links.

- We can leave the settings as they are because, as a rule, it’s more than enough. Hit Finish, and in a couple of moments the tool will supply you with the full list of backlinks.

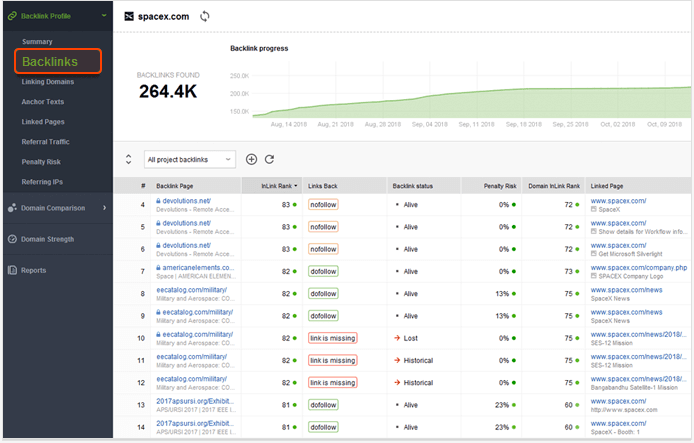

- Now move to Backlink Profile > Backlinks to see all links that point to you at the moment.

2. Identify spammy links

Now that you’ve accessed your backlink profile, you need to identify which links are toxic. The best and most convenient way to do that is by calculating their Penalty Risks. Penalty Risk is SEO SpyGlass’s own metric, based on factors that characterize backlink profile diversity, the age of the linking domain, the amount of link juice each link passes, and many more.

What’s more, we’re going to calculate your links’ Penalty Risks by linking domain, because many spammy links often come from a single domain. This will also considerably speed up the whole process.

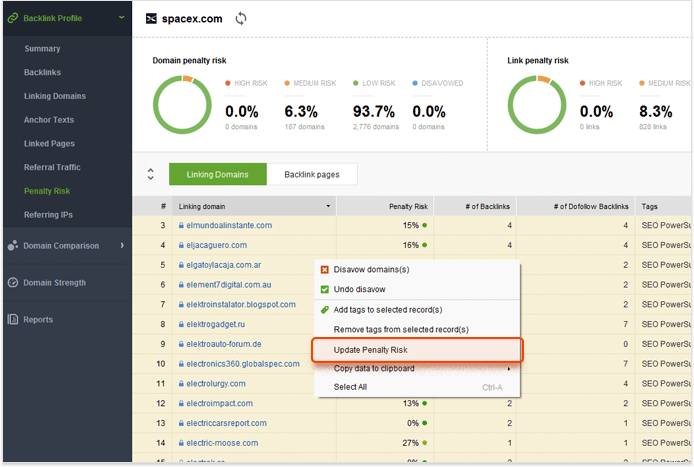

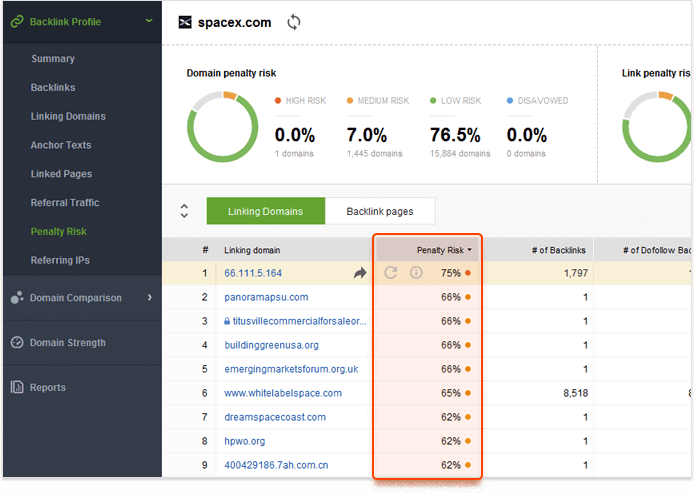

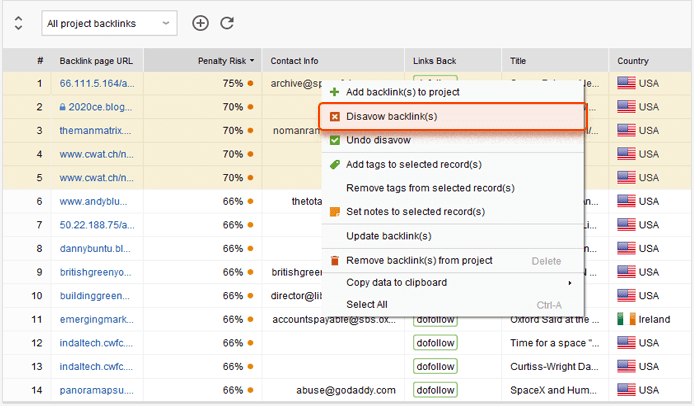

- Move to Backlink Profile > Penalty Risk, select all linking domains, and hit Update Penalty Risk.

- Now sort the domains by their riskiness so that the riskiest ones are displayed first. To do that, just click the header of the Penalty Risk column.

First and foremost, you need to investigate backlinks with Penalty Risks over 70%, because these are the ones that can get you hit by the Penguin penalty. Those between 30 and 70% are somewhat less likely to be harmful but still need to be checked. Backlinks with a Penalty Risk from 0 to 30% are safe and sound and most probably bring you a lot of value.

- So now let’s manually visit the most dangerous pages with Penalty Risks over 70%. To do that, you need to click the arrow next to the domain you want to check and go through this page in your browser.

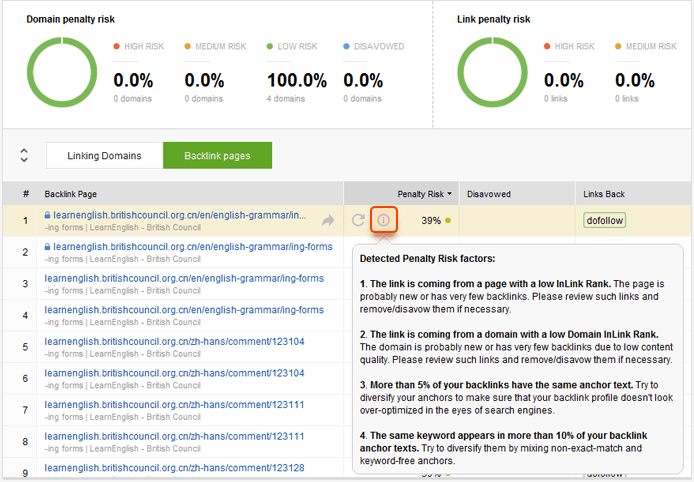

- Now deal with the domains with Penalty Risks from 30 to 70% and look at the factors that caused such a score. Just hit the info button next to a domain to see why it was considered not safe enough.
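Penalty Risk is calculated by SEO SpyGlass, and its formula isn’t reproduced here. Assuming you have exported your backlinks along with their Penalty Risk scores (the BACKLINKS list below is made up), this Python sketch shows the triage logic from this section: group links by linking domain, keep each domain’s worst score, sort the riskiest first, and place each domain in the over-70%, 30 to 70%, or under-30% tier. It groups by exact hostname, so subdomains count as separate domains, which is a simplifying assumption.

```python
from collections import defaultdict
from urllib.parse import urlsplit

# Hypothetical export: (backlink URL, Penalty Risk in percent).
BACKLINKS = [
    ("https://spam-directory.example/page1", 85),
    ("https://spam-directory.example/page2", 40),
    ("https://blog.example.org/review", 10),
    ("https://forum.example.net/thread/7", 55),
]


def bucket(risk):
    """Map a Penalty Risk percentage to the review tiers described above."""
    if risk > 70:
        return "check first"  # over 70%: visit these pages manually
    if risk >= 30:
        return "review"       # 30 to 70%: look at what caused the score
    return "safe"             # under 30%


def risk_by_domain(backlinks):
    """Group backlinks by hostname and keep the highest risk seen for each."""
    worst = defaultdict(int)
    for url, risk in backlinks:
        host = urlsplit(url).hostname or ""
        worst[host] = max(worst[host], risk)
    # Riskiest domains first, like sorting the Penalty Risk column.
    return sorted(((d, r, bucket(r)) for d, r in worst.items()), key=lambda t: -t[1])


if __name__ == "__main__":
    for domain, risk, tier in risk_by_domain(BACKLINKS):
        print(f"{risk:>3}%  {tier:<11}  {domain}")
```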

3. Get rid of toxic links

Now that you’ve checked the most dangerous links and decided which of them you want to get rid of, it’s only right to remove them from your backlink profile for good. One of the possible ways to do that is by contacting webmasters and asking them to remove spammy links pointing to your site.

First of all, we need to make the tool collect emails of these website owners.

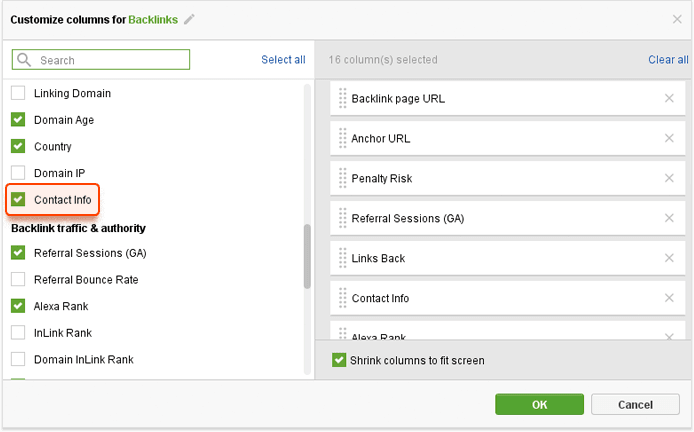

- Stay in the same Backlinks submodule, right-click the header of any column, check Contact Info from the menu, and hit OK.

- At the next step, select the links you need and update the Contact Info column. In a couple of moments, you will see the website owners’ emails in a separate column.

The only thing left to do is to get in touch with them and ask if it’s possible to take down the links.

4. Disavow spammy links

Unfortunately, you might not hear back from some website owners. If that’s the case, consider disavowing the toxic links with Google’s Disavow tool. This tells Google which links to ignore when ranking your site. So at this point, you need to create a disavow file, which can now be done directly in SEO SpyGlass.

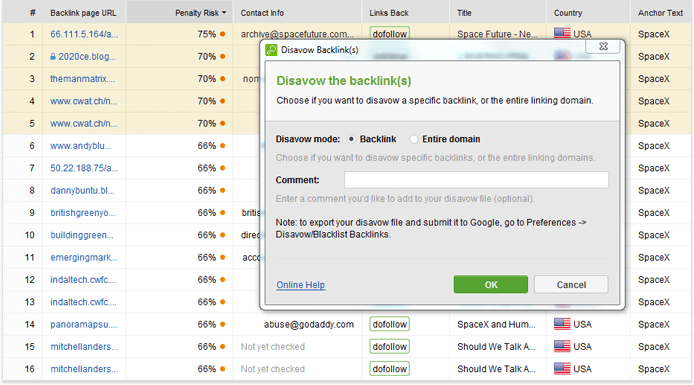

- Simply right-click the backlinks that you want to disavow and hit Disavow backlinks.

- After that, you need to decide whether it’s a whole domain or just one link that you want to disavow.

Sometimes it may be reasonable to disavow an entire domain. By doing so, you won’t miss other spammy links coming from it.

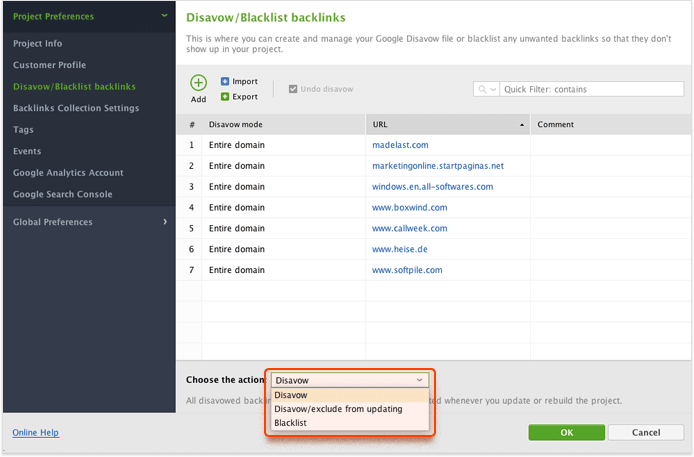

At this stage, you need to decide whether you want to just disavow the backlinks, disavow and exclude them from all your future project updates, or disavow and blacklist them so that they are removed from your project forever.

- So go to Preferences > Disavow/Blacklist Backlinks, choose the action (Disavow, Disavow/exclude from updating, Blacklist), and hit OK.

- Now hit the Export button and save your disavow list on your PC. Then go to Google’s Disavow tool and submit the disavow file.
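SEO SpyGlass generates the file for you, but the format is simple enough to assemble by hand if needed: per Google’s documentation, a disavow file is a plain .txt file (UTF-8 or 7-bit ASCII) with one URL or domain per line, a domain: prefix for whole domains, and # at the start of comment lines. The helper below is a hypothetical sketch of that format; it also drops single URLs whose exact host is already disavowed as a whole domain.

```python
from urllib.parse import urlsplit


def build_disavow_file(domains, urls, note=None):
    """Build disavow-file text: a '#' comment line, 'domain:' entries, then single URLs."""
    hosts = sorted({d.strip().lower() for d in domains})
    lines = [f"# {note}"] if note else []
    lines += [f"domain:{host}" for host in hosts]
    for url in sorted(set(urls)):
        # A URL on a host already disavowed as a whole is redundant, so skip it.
        if (urlsplit(url).hostname or "") not in hosts:
            lines.append(url)
    return "\n".join(lines) + "\n"
```

For example, `build_disavow_file(["spam-directory.example"], ["https://forum.example.net/thread/7"], note="Owners contacted, no reply")` returns a comment line, one `domain:` line, and one URL line, ready to be saved as a UTF-8 .txt file and uploaded.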

Well, that’s how you get rid of toxic links. Make such audits part of your routine, and you’ll greatly reduce the risk of being hit by Penguin.

The Exact Match Domain update

Also in 2012, quick-witted SEOs found a way to make poor-quality sites rank well in search results: they simply used exact match search queries as their domain names. Back in the day, when Google saw a query and a domain that matched it 100%, it assumed the site was of the highest relevance and ranked it number 1 in search results. That is why Google had to take action and introduced the Exact Match Domain update to remove low-quality sites with exact match domains from its top positions.

Watch out for

- Exact match domains with thin content

What to do

Don’t get me wrong: using an exact match domain is totally OK as long as your site is filled with quality content. So the best thing you can do is find any pages with thin content (you already know how to do that) and fill them with relevant, original content.

Even though an exact match domain won’t get you under a penalty, it also won’t boost your rankings. So if you want to raise your website’s authority, it’s better to invest your time and efforts in quality link building. Luckily, with LinkAssistant, building links is no longer a complicated and time-consuming activity. By using the tool’s 10 different methods of prospect search (Guest Posting, Commenting, Reviews, Forums, etc.), you can get numerous link building opportunities and significantly raise your website’s authority.

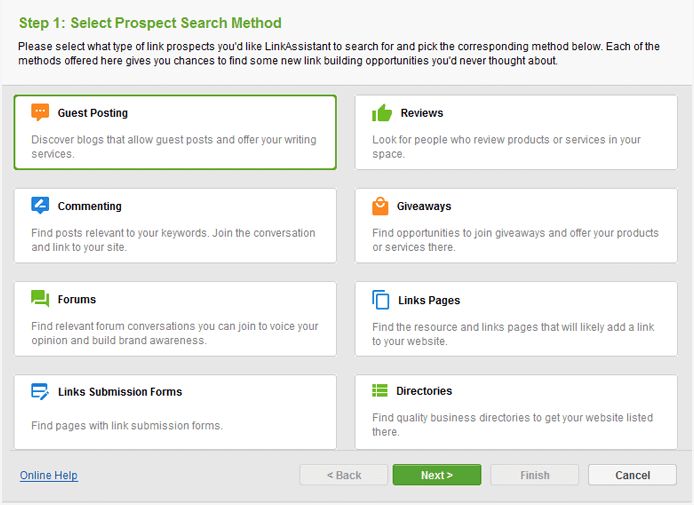

- Just start the tool, create a new project for your site, and enter your website’s URL.

- After that, move to the Prospects module, hit the Look for Prospects button and pick a prospect search method to your liking.

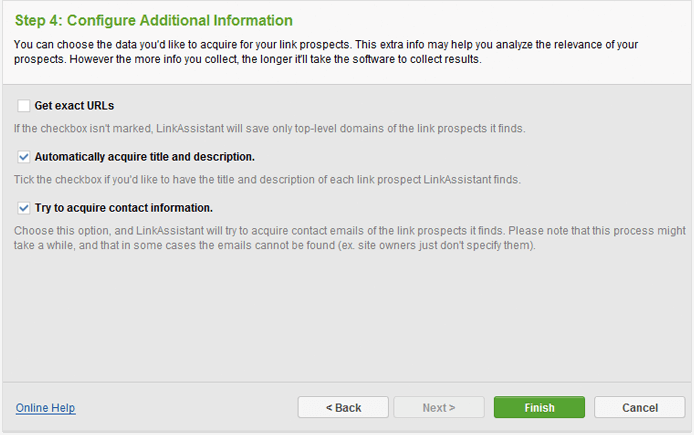

- Then enter keywords relevant to your niche, configure the settings as shown in the screenshot, and hit Finish.

After that, you’ll get a big old list of link building opportunities along with email addresses. Just contact the website owners and feed your backlink profile with some new links in no time at all. By the way, with the built-in email client, you can even send messages to your link partners without leaving the tool.

Pirate

The only war that Google still hasn’t won is the war against Internet piracy. A decade ago, the Internet was flooded with pirated content (movies, music, books, etc.). Of course, that wasn’t right. Google had to respect copyright owners and therefore rolled out the Pirate update, which aimed to down-rank sites that violate copyrights.

Watch out for

- Copyright violations

What to do

1. Proceed with publishing original content

Since Google prioritizes unique content like never before, just keep publishing it. Make sure you don’t steal anyone else’s content: Google will most probably notice it and penalize your site. What’s more, scraped content will hardly bring you any link juice, so there’s no point in doing it.

2. Ask to remove pirated content

The Internet is an extremely crowded place, so it’s often hard for Google to fight piracy on its own. If you’ve noticed that someone is stealing your content or that your competitors use pirated content, don’t hesitate to help Google by submitting a request via the Removing Content From Google tool.

Hummingbird/RankBrain

Once Google had established some order in its search results, making higher-quality sites rank higher, it started moving toward understanding search intent. That is why it rolled out Hummingbird in 2013 and RankBrain in 2015. Both updates serve to better understand the meaning behind a query, but they perform different functions.

Basically, Hummingbird’s main purpose is to interpret search queries and provide searchers with the results that best match their intent. Earlier on, Google looked at the individual words within a query to figure out what a user wanted. With Hummingbird, it takes the combination of words and their context into consideration.

As for RankBrain, it’s a machine learning system that helps Google process unfamiliar and unique queries. It’s an addition to Hummingbird that relies on historical data and previous user behavior. Simply speaking, RankBrain looks at individual queries, the search results delivered in response to them, and the results users finally go for. It then tries to understand the logic behind those choices to predict the best results for queries it hasn’t seen before.

Watch out for

- Exact-match keyword targeting

- Unnatural language

- Lack of query-specific relevance features

What to do

1. Diversify your content

Like it or not, the days when sprinkling five short-tail keywords all over your content was enough are gone for good. If you want your site to rank high in the post-Hummingbird era, you need to diversify your content with related terms and synonyms as much as you can.

What’s more, it’s highly advisable to use natural language, for instance in the form of questions. This will also raise your chances of winning a featured snippet.

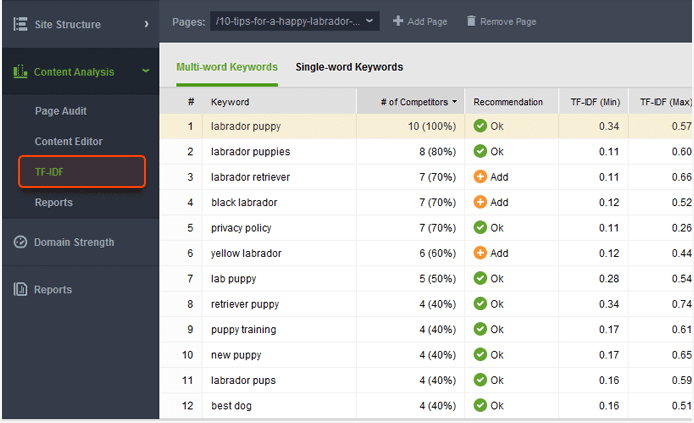

2. Carry out TF-IDF analysis

One of the most important things to do when optimizing for Hummingbird and RankBrain is to improve your page’s relevance and comprehensiveness. The best way to do that is with the TF-IDF analysis tool built into WebSite Auditor. In a nutshell, this method finds keywords by analyzing the pages of your top 10 competitors and collecting the terms they have in common.

- Move to Content Analysis > TF-IDF, enter a page to analyze in the Pages search bar in the upper left corner, and see how your keyword usage compares to competitors’ on the TF-IDF chart.
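WebSite Auditor runs the TF-IDF math for you. To make the idea concrete, here is a simplified Python sketch, not the tool’s actual formula: term frequency (TF) measures how often a word appears on a page, inverse document frequency (IDF) down-weights words that appear on many pages, and their product scores each term. Because the method above looks for terms competitors share, the sketch uses a smoothed IDF, log(N / df) + 1, so that a word used on every competitor page still keeps a positive weight. The sample texts, the 50% share cut-off, and the function names are assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def tf_idf(docs):
    """Return one {term: weight} dict per document.

    tf  = occurrences of the term / number of tokens in the document
    idf = log(N / df) + 1 (smoothed, so terms found in every document keep a positive weight)
    """
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1
        weights.append(
            {t: (c / total) * (math.log(n_docs / df[t]) + 1) for t, c in counts.items()}
        )
    return weights


def missing_terms(my_page, competitor_pages, top_n=10, min_share=0.5):
    """Terms used by at least `min_share` of competitors but absent from `my_page`,
    ranked by their average TF-IDF weight across all competitor pages."""
    if not competitor_pages:
        return []
    weights = tf_idf(competitor_pages)
    n = len(competitor_pages)
    mine = set(tokenize(my_page))
    scores = {}
    for term in set().union(*weights):
        present = [w[term] for w in weights if term in w]
        if term not in mine and len(present) / n >= min_share:
            scores[term] = sum(present) / n
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_n]
```

For example, with the competitor texts "running shoes with cushioning for marathon training", "marathon training shoes and cushioning tips", and "best running shoes for marathon", and your page reading "running shoes for sale", the sketch suggests marathon, cushioning, and training as terms to consider covering.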

Pigeon/Possum

For many years, it was uber difficult for local results to outrank the ordinary ones. That was a bit unfair, because local results are a lot more relevant when a user is searching for something nearby. That is why Google set a course toward improving the quality of local search results and rolled out Pigeon in 2014 and Possum two years later.

The main purpose of the Pigeon update was to create a closer connection between Google’s local search algorithm and the main one. Since then, the same SEO factors have been taken into consideration when ranking both local and non-local search results. This gave local directory sites a considerable boost. The update also created much closer ties between Web search and Google Maps search.

In 2016, Google changed the local SEO landscape once again with its Possum update. After the update went live, Google started ranking search results based on the searcher’s geographical location: the closer you are to a business, the more likely you are to see it among your search results. Google also became more sensitive to the exact phrasing of a query, so even tiny changes in a query may produce completely different results.

Watch out for

- Poor on-page optimization

- Improper setup of a Google My Business page

- NAP inconsistency

- Lack of citations in local directories

- Sharing an address with a similar business

What to do

1. Optimize your pages

Now that the same SEO criteria are used for both local and ordinary search results, the owners of local businesses need to make sure their webpages are properly optimized. The best way to identify weak spots in your on-page optimization is by running an on-page analysis with the help of WebSite Auditor.

- Just move to Content Analysis > Page Audit and look through all the factors. Then spot the ones with Error statuses and fix them. That’s quite easy to do as the tool nicely supplies you with comprehensive instructions.

2. Create a Google My Business page

If you want your site to be included in Google’s local index, creating a Google My Business page is a must. Make sure to categorize your business correctly so that it’s displayed for relevant search queries. It’s also extremely important that your NAP (name, address, and phone number) is consistent across all your listings, so keep an eye on that as well.
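Keeping NAP consistent is mostly manual work, but once you have collected your listings, a short script can flag the odd one out. This Python sketch assumes you have gathered each listing’s name, address, and phone number yourself (the LISTINGS data and the tiny abbreviation table are made up); it normalizes formatting differences and treats the most common version as the reference. Real-world address matching needs far more rules than this.

```python
import re
from collections import Counter

# Hypothetical listings you collected by hand: source -> (name, address, phone).
LISTINGS = {
    "website": ("Joe's Bakery", "12 Main Street, Suite 3", "(555) 010-2000"),
    "google_my_business": ("Joes Bakery", "12 Main St., Ste 3", "555-010-2000"),
    "directory_a": ("Joe's Bakery", "12 Main St Ste 3", "555.010.2000"),
    "directory_b": ("Joe's Bakery", "14 Main St", "555-010-2000"),
}

# Illustrative abbreviation table; real address normalization needs many more rules.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste"}


def normalize_text(text):
    """Lower-case, drop apostrophes and punctuation, and shorten common address words."""
    words = re.findall(r"[a-z0-9]+", re.sub("['\u2019]", "", text.lower()))
    return " ".join(ABBREVIATIONS.get(w, w) for w in words)


def normalize_phone(phone):
    """Keep digits only, so '(555) 010-2000' and '555.010.2000' compare equal."""
    return re.sub(r"\D", "", phone)


def nap_mismatches(listings):
    """Return the sources whose normalized NAP differs from the most common version."""
    if not listings:
        return []
    normalized = {
        source: (normalize_text(name), normalize_text(address), normalize_phone(phone))
        for source, (name, address, phone) in listings.items()
    }
    reference, _count = Counter(normalized.values()).most_common(1)[0]
    return sorted(s for s, nap in normalized.items() if nap != reference)
```

Here the first three listings differ only in formatting and normalize to the same NAP, so only directory_b, with its different street number, is reported.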

3. Get featured in relevant local directories

It’s generally much easier for a local business to get its directory listing to rank high in SERPs than to get its own site into the regular search results. What’s more, local business directories saw a significant increase in rankings after Pigeon. So what you need to do is find quality local directories and get in touch with their webmasters to ask to be featured. Just open your Rank Tracker project, update rankings, and have a look at the sites in top positions for your keywords. Those will most probably include some relevant local directories.

4. Carry out geo-specific rank tracking

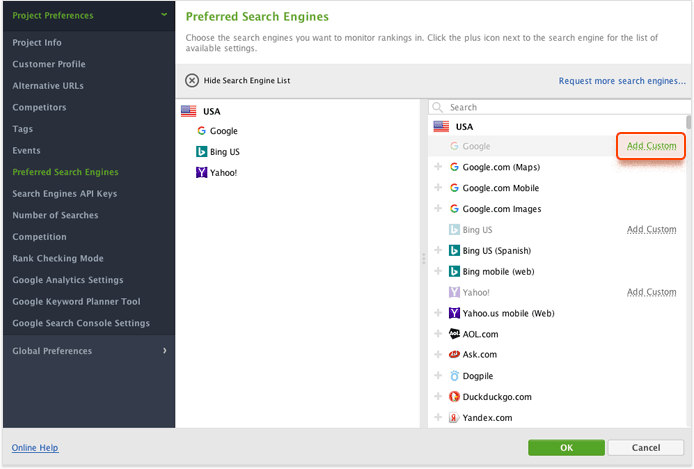

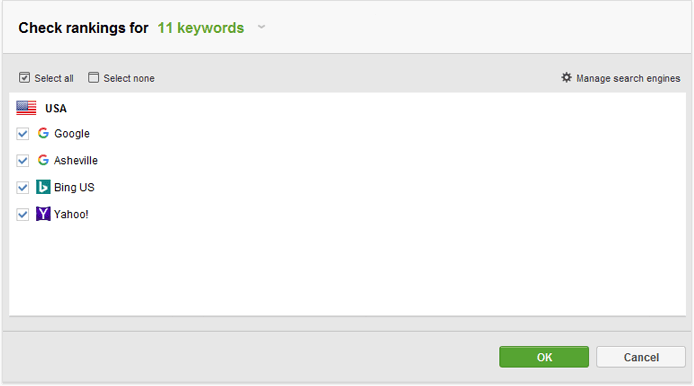

Since search results now depend on the location you check rankings from, it makes sense for local business owners to do geo-specific rank tracking. Of course, Rank Tracker is here to help you with that.

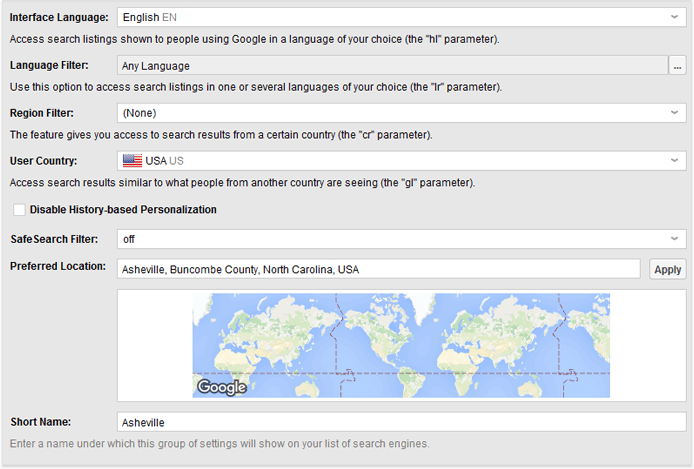

- Go to Preferences > Preferred Search Engines and click the Add Custom button next to Google.

- In the Preferred Location field, type in the name of the city, district, or street address from where you want to check your rankings and give your new target location a short name.

- Now get back to the Rank Tracking submodule, hit Check Rankings, select your preferred location and hit OK.

This can be done for as many locations as you want. For more detailed instructions and advanced advice, please refer to this super cool guide on personalized search.

Fred

The last thing Google ever wants is to serve websites flooded with excessive ads, aggressive monetization, and low-value content. What Google does want is to be useful to searchers. Therefore, it rolled out the Fred update, which aimed to penalize sites that offered little value to searchers and were used mainly to generate revenue.

Watch out for

- Low-value, thin content

- Excessive ads

- Aggressive monetization

- User experience barriers and issues

What to do

1. Look for pages with thin content

Showing ads is totally OK with Google. The only condition is that they need to be placed on webpages that are valuable to users. That is why, if you want to stay safe from Fred, the very first thing to do is audit your site for thin content. I’ve already shown you how to do that with WebSite Auditor. If you’ve spotted pages that need improving, go ahead and fill them with new, helpful information where possible.

2. Check user experience

There’s hardly anything more frustrating in life than ads that stop you from reaching the content you want, is there? Put yourself in your customers’ shoes and evaluate your site against Google’s Search Quality Rater Guidelines. Doing so can also significantly improve your bounce rate, because aggressive ads may be the reason users leave your pages.

3. Reconsider your ads

As I already mentioned, placing ads on your site is fine and legit. You just need to look at your ads objectively and make sure they don’t amount to aggressive monetization. Remember that, combined with thin content, they can get you hit by Fred.

Mobile Friendly Update/Mobile-first indexing

Google’s obsession with mobile friendliness started with the Mobile Friendly Update (also known as Mobilegeddon) back in 2015. The mission of this update was to up-rank mobile-optimized websites in mobile search. It’s worth mentioning that desktop search results were not affected by the update.

After Mobilegeddon, Google started rolling out mobile-first indexing, which aimed at crawling and indexing pages with its smartphone agent first, before desktop pages. Basically, mobile-first indexing means that the mobile version of your page is used for indexing and ranking to help mobile users find what they want. By the way, websites that only have a desktop version still get indexed as well.

Watch out for

- Lack of a mobile version of the page

What to do

1. Make your website accessible

Sooner or later, the majority of websites will be migrated to mobile-first indexing. If you haven’t received a notification in your Google Search Console account, your website most likely hasn’t been migrated yet. In the meantime, the main thing you can do is check your robots.txt instructions to make sure your pages are not blocked from crawling. Keep them accessible so that when Googlebot comes to index your site, nothing stops it.
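For a quick check of many URLs at once, Python’s standard-library urllib.robotparser can tell you whether a given user agent may fetch each one. The robots.txt content and URLs below are examples, not recommendations. Note that Python’s parser uses simple prefix matching and does not implement every detail of Google’s rules (such as * and $ wildcards inside paths), so treat the result as a first pass.

```python
from urllib import robotparser

# Example robots.txt content; replace it with the text of your own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: Googlebot
Disallow: /assets/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots.txt disallows for the given user agent."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


if __name__ == "__main__":
    pages = [
        "https://example.com/",
        "https://example.com/assets/app.js",
        "https://example.com/admin/login",
    ]
    print(blocked_urls(ROBOTS_TXT, pages))
```

In this example Googlebot is blocked from /assets/ but not from /admin/: when a group names Googlebot specifically, that group applies instead of the User-agent: * group, which is easy to miss if you only read the * rules.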

2. Go mobile/Check mobile friendliness

Now that Google is obsessed with mobile friendliness like never before, adapting your website for mobile devices is a must. There are lots of mobile website configurations to choose from; however, Google highly recommends going for responsive design. Even if you believe your website is perfectly fine in terms of mobile friendliness, it’s a good idea to check it with Google’s Mobile-Friendly Test. By doing so, you’ll make sure that it meets Google’s requirements.

Page Speed Update

Well, this brings us to Google’s most recent major update, the Page Speed Update. With this update, page speed finally became a ranking factor for mobile searches. This means that faster sites now have a better chance of dominating search results.

Watch out for

- Slow loading speed

- Poor technical optimization

What to do

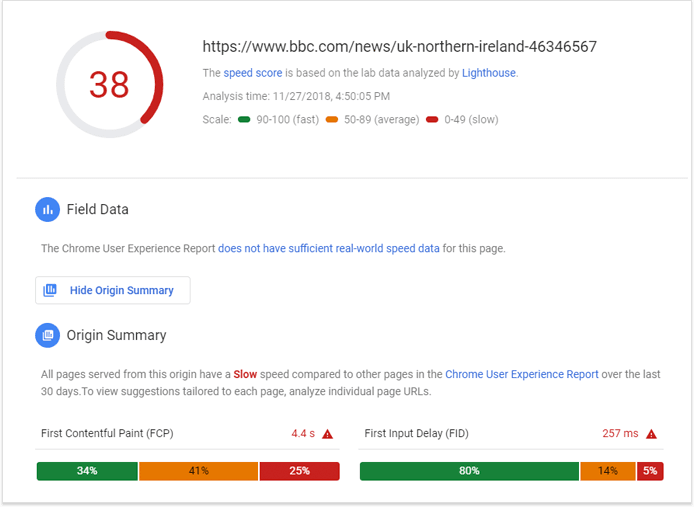

1. Measure your pages’ speed

The very first thing to do when optimizing for the Page Speed Update is to understand where your page speed stands. The best way to do that is with Google’s PageSpeed Insights tool. The tool evaluates your page on a scale from 0 to 100 and, where real-user data is available, also reports two key field metrics: FCP and FID.

- FCP (First Contentful Paint) measures how long it takes for the first piece of content, such as text or an image, to appear on the screen.

- FID (First Input Delay) measures the time from when a user first interacts with your site to the time when the browser actually responds to that interaction.

If your page’s speed score is lower than 90, there’s some room for improvement.
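If you need to check many pages, PageSpeed Insights also has an API. Below is a hedged Python sketch against the v5 runPagespeed endpoint: fetch_report makes the network call (heavier use requires an API key), and summarize converts the 0 to 1 Lighthouse performance score into the 0 to 100 scale shown in the tool and collects any real-user metric categories. The response field names reflect the v5 API as I understand it; Google has changed the reported metrics over time, so check the current API reference before relying on them.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def fetch_report(page_url, strategy="mobile"):
    """Request a PageSpeed Insights v5 report (network call; heavier use needs an API key)."""
    query = urlencode({"url": page_url, "strategy": strategy})
    with urlopen(f"{PSI_ENDPOINT}?{query}", timeout=120) as response:
        return json.load(response)


def summarize(report, target=90):
    """Pull the 0-100 performance score and any field-data metric categories."""
    score = report["lighthouseResult"]["categories"]["performance"]["score"]  # 0.0 to 1.0
    score_100 = round(score * 100)
    # Real-user (field) data is only present when Google has enough of it for the URL.
    metrics = report.get("loadingExperience", {}).get("metrics", {})
    field = {name: data.get("category") for name, data in metrics.items()}
    return {"score": score_100, "needs_work": score_100 < target, "field": field}
```

For example, `summarize(fetch_report("https://example.com/"))` returns a dict with the score, a needs_work flag based on the 90 rule of thumb above, and whatever field metrics the API reported for that URL.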

2. Improve your page speed score

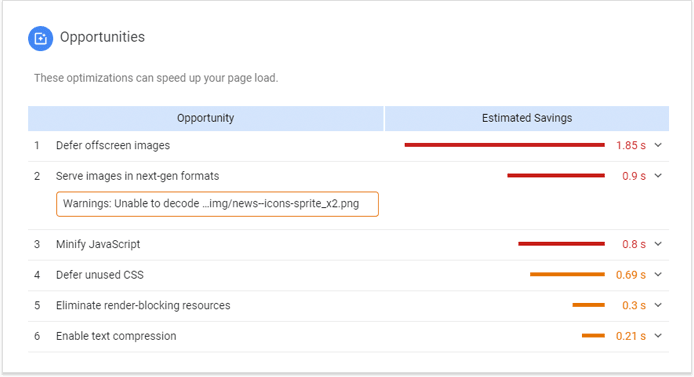

Every time you analyze a page with PageSpeed Insights, the tool gives you optimization advice that can help you significantly speed up the page.

Scroll down a bit to the Opportunities section and see what exactly can be improved. Hit the Learn more button for more detailed instructions, or ask your webmaster to help you out.

Well, those were Google’s major algorithm updates of the last decade. As you can see, the SEO landscape has changed a lot and will keep changing. The only thing you can do as an SEO is keep your eyes peeled and optimize for upcoming updates in time.

I’m always looking forward to your feedback. Tell me about your experience with Google algo updates. How did they impact your site and rankings? Let’s recall these good old days together in the comments. And of course, may the force of SEO be with you.

Source

https://www.link-assistant.com/news/google-algo-updates-2018.html